If you are interested in any of the topics listed below or in the general area, please contact me (Nils Jansen). My webpage can be found here. I'm always happy to discuss directions and topics, and this webpage may often be outdated due to recent research developments.

We conduct the supervision of Bachelor and Master theses very interactively. Often, one or more PhD student is involved, and in regular meetings with all students there is the opportunity to give short presentations and discuss vividly. Some of the topics may be co-supervised with Jurriaan Rot or Sebastian Junges.

With the rapidly growing application of artificial intelligence and machine learning in social life, considering for instance autonomous systems such as self-driving cars, the need for verified or dependable guarantees against potentially fatal accidents is self-evident. An important question is then, how systems can be made safe. A very nice article discussing challenges and open problems can be found here. If one of the open problems is of interest, let me know.

Generally, we offer topics within the following exciting directions. In most of the directions, we have both Bachelor and Master topics available, and there are deep theoretical challenges as well as rather practical topics that mostly involve an implementation.

We are interested in the correctness and robustness of neural networks. Join us to write your thesis on a very recent and interesting topic in collaboration with TNO or the University of Antwerp.

All topics would involve at least a lightweight implementation.

The real world is inherently uncertain. We look into formal models for agents that have to operate under various sources of such uncertainty, for instance in the form of Markov decision processes.

Topics can be very theoretical or very practical. We use techniques from robust optimization, sampling, abstraction, and many more.

We offer multiple theses as part of industry projects, for instance with the companies Alfalval, Rolsch, Nexperia, or Canon.

As an example for a project, within PrimaVera you can work on the predictive maintenance of high-tech systems.

If you have a company and are looking for a supervisor, feel free to contact me and we can see if that fits.

In recent years, the interaction between humans and robots has become more and more relevant to our daily life. The task here is to formalize correctness or confidence measures in the safe interaction between humans and robots.

The project builds on prior work and may be performed with experts from other universities. See this overview article to get an idea.

In order to verify an AI system, a model is needed. However, in most cases, such a model is not directly available. This project will investigate learning techniques for deriving a model that is amenable to formal verification techniques.

A particular focus can be learning state representations from Atari games and verifying that a player will always make a safe choice.

To learn more, have a look at https://arxiv.org/pdf/1906.08226.pdf and https://arxiv.org/pdf/1806.01363.pdf, or Frits Vaandrager's famous paper on model learning.

Real-world robotic systems carry problems that go beyond the current capabilities of AI. We aim to enable machine learning methods for continuous and high-dimensional state spaces for systems that exhibit various types of noisy sensors. As an example, see this or this paper.

I am an ambassador of Radboud's Green IT center. Within this program, my personal goal is to derive IT solutions that help towards more sustainability, for instance via improved ressource use or optimal power management for renewable energies.

An important part of our research is to work with exciting examples, case studies, and driver cases. If you are interested in finding new cool examples with us, there are always topics available.

The task could for instance be to think of a nice problem, and then model it in a formal way so it can be analyzed with our methods.

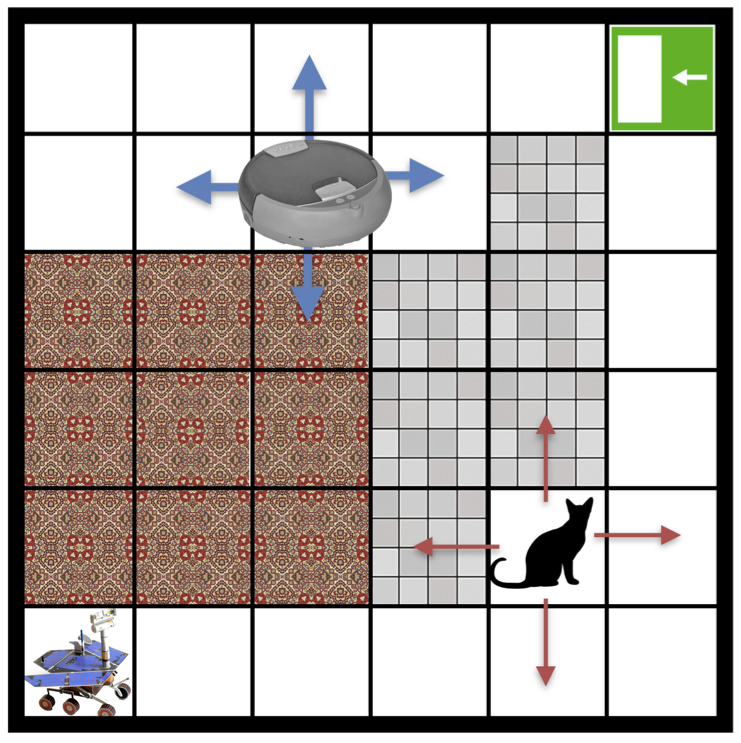

See below a few examples of our case studies: satellite motion planning, aircraft collision avoidance, and PacMan respectively.

Have a look at the topics that we did so far!

The concrete topics below may be partially outdated, but feel free to have a look or contact me about them!

In machine learning algorithms, a system model is learned based on observation of the real world. Upon further observation, this model may be subject to change. The problem of applying formal verification to such a model is that it is not a well-defined and fixed model of the system at hand. This project proposes to robustify a current learned model against further changes based on future observations. If it can be verified, that given system specifications are satisfied for this robust model, all future observations that are consistent with the intervals will not change this fact.

The project will involve the development of underlying formalisms and a small prototype implementation using Python libraries for reinforcement learning and model checking using the probabilistic model checker Storm.

Take a problem that is sufficiently modeled by a POMDP, for instance, an agent navigating in a partially unknown environment. Intuitively, the agent does not really know, in which state the system is, in fact, there is a probability distribution over possible states, called the belief state. This yields an infinite number of possibilities.

The task is to make use of human capabilities in inferring from partial observation to resolve or reduce the belief space of the POMDP. We propose to immerse a human either actively or as an observer into a verification scenario. Possibilities range from a sophisticated virtual reality immersion to a plain 2-D representation of the scenario. Check this article on gathering human feedback from the OpenAI blog.

Reinforcement learning largely suffers from the problem, that it is basically uninformed exploration of the state space of a system. We want to study the exploitation of counterexamples to identify corner cases and critical parts of state spaces to guide learning. For applications in Deep Neural Networks, check this article.

Verification for POMDPs is not feasible in general. We propose to use so-called permissive schedulers computed on the observable part of a POMDP to allow for as much freedom in decision making as possible.

In a POMDP exploration scenario such as typical motion planning for robots, this will reduce the number of situations, where the exact system state needs to be computed: Consider a situation where the belief state is a distribution over a certain number of states. In other words, there are many possibilities of states, the system can be in. Usually, in order to make a decision, this needs to be based on all possibilities. If now each state has a precomputed permissive choice that agrees with all states, no further computation is necessary.

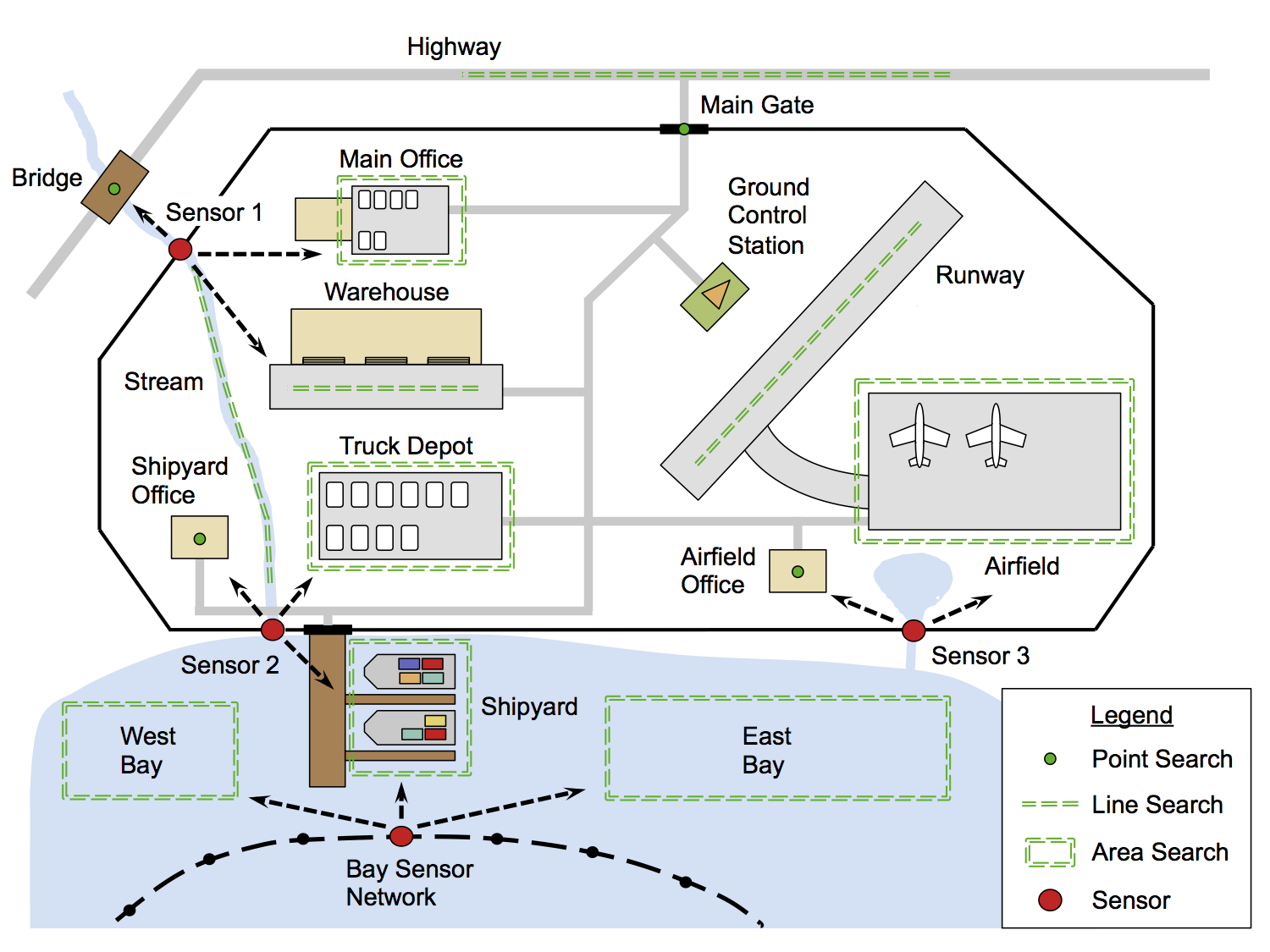

This very broad and practical project aims at finding and modeling typical robotics case studies that can be formally verified. Here, we are not fixed on probabilistic systems but also care for hybrid systems or even plain transitions systems. Many case studies may for instance be found in this book about probabilistic robotics by Sebastian Thrun.

Many applications are structured in the sense that the underlying systems exploit a certain structured in the sense that there exist multiple but finitely many pre-specified options for the system configuration.

Imagine a system incorporating surveillance of the environment using a number of sensors. Each of these sensors has a fixed probability for false alarms or misclassification, depending on the quality of the sensor. Increased quality may come at an increased acquisition or maintenance cost. We propose to model such a given structured scenario as a parametric Markov decision process, where the several fixed options influence both the cost and the system dynamics.

The goal is to synthesize a system configuration that adheres to given cost and safety specifications. The thesis topic involves work on an existing and practical case study and exploitation of current theoretical achievements.

Traditional schedulers (also referred to as strategies or adversaries) resolve all non-determinism in a system such as Markov decision process (MDP). When probabilities are not fixed but given by an interval range of probabilities, synthesis methods require permissiveness for a scheduler in the sense, that only critical behavior (that is, non-deterministic choices) is excluded.

The goal is to work on a case study focused on autonomous systems such as self-driving cars, where schedulers need to be synthesized that guarantee safe behavior while allowing for as much freedom as possible. Prior work can be found here.

Many verification problems for parametric probabilistic systems can be cast as a nonlinear program. Solving such programs is in general infeasible. However, for a certain subclass, so-called convex programs, efficient methods exist. In many applications such as networks, planning problems, and control theory in general, it is even stated that only convex problems are well stated, consider this great book by Stephen Boyd.

Some old topics where we could still find relevant thesis topics are below.

The famous game 2048 exhibits probabilistic behavior. The task is to use machine learning and verification methods to compute an optimal AI for the game, or to prevent humans from making disastrous moves. See here for instance. Topic can be rather applied or very theoretical.

POMDPs are extremely important in many robotics and AI applications. As in the standard AI textbook by Stuart Russell and Peter Norvig: Partially observable MDPs ... are usually viewed as much more difficult than ordinary MDPs. We cannot avoid POMDPs, however, because the real world is one.

The task is to work on methods to compute strategies with finite or no memory, which is (contrary to infinite memory) still feasible but not investigated very much. Approaches can range from dedicated branch and bound methods (algorithmic), mixed-integer linear programming (optimization), or SMT solving (satisfiability). All approaches that lead to convincing results are expected to be able to lead to research publications. Topic is rather theoretical with some practical aspects.

We seek methods that help a machine learning algorithm to solve PAC-MAN. See for instance the videos uploaded here for a new result we recently obtained. The paper is available here. Approaches range from model checking, machine learning to SMT solving. Topic can be rather applied or very theoretical.

Building on prior work, this project concerns the application of several concepts from convex optimization to well-known verification problems for parametric probabilistic systems that cannot be solved efficiently.